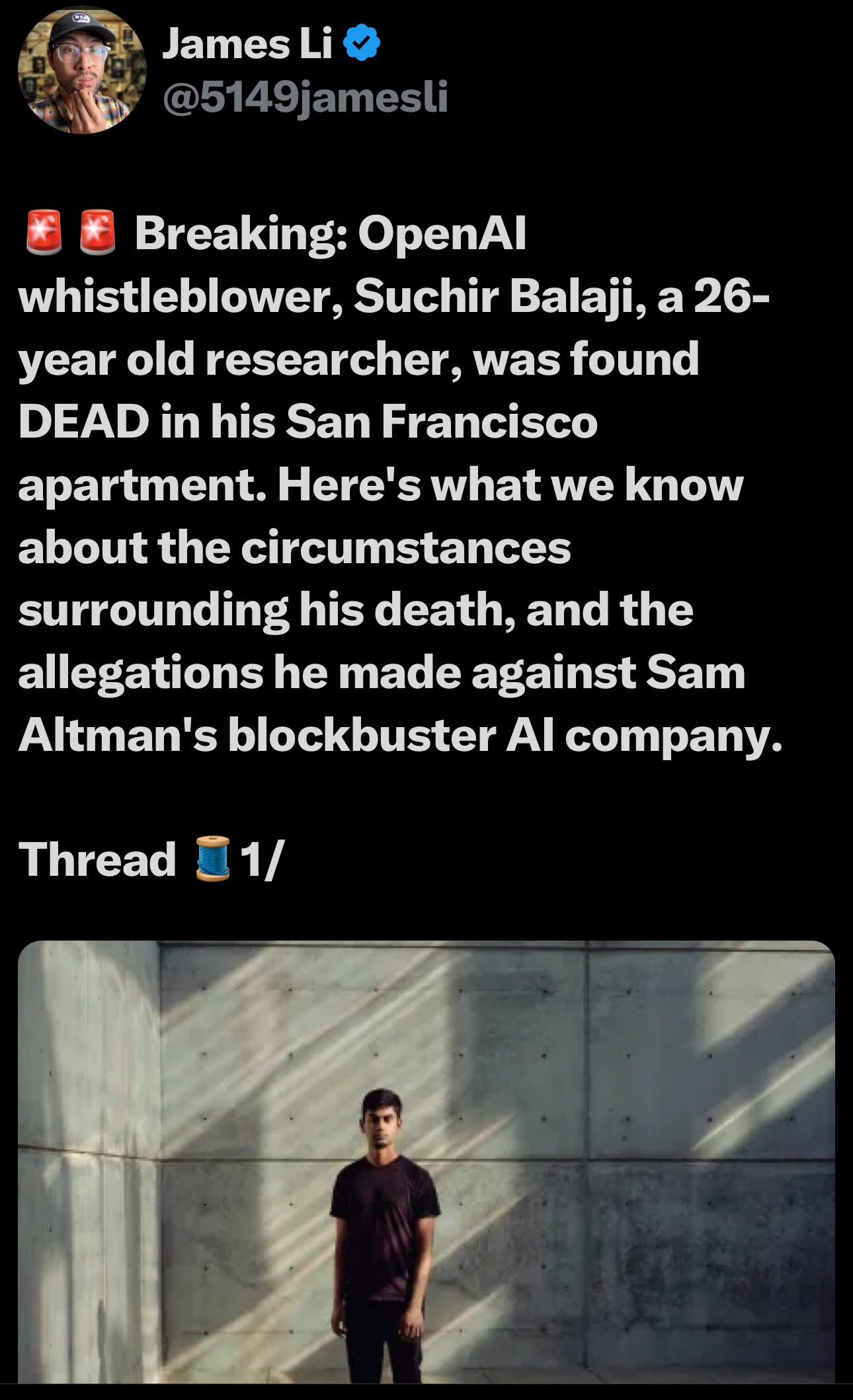

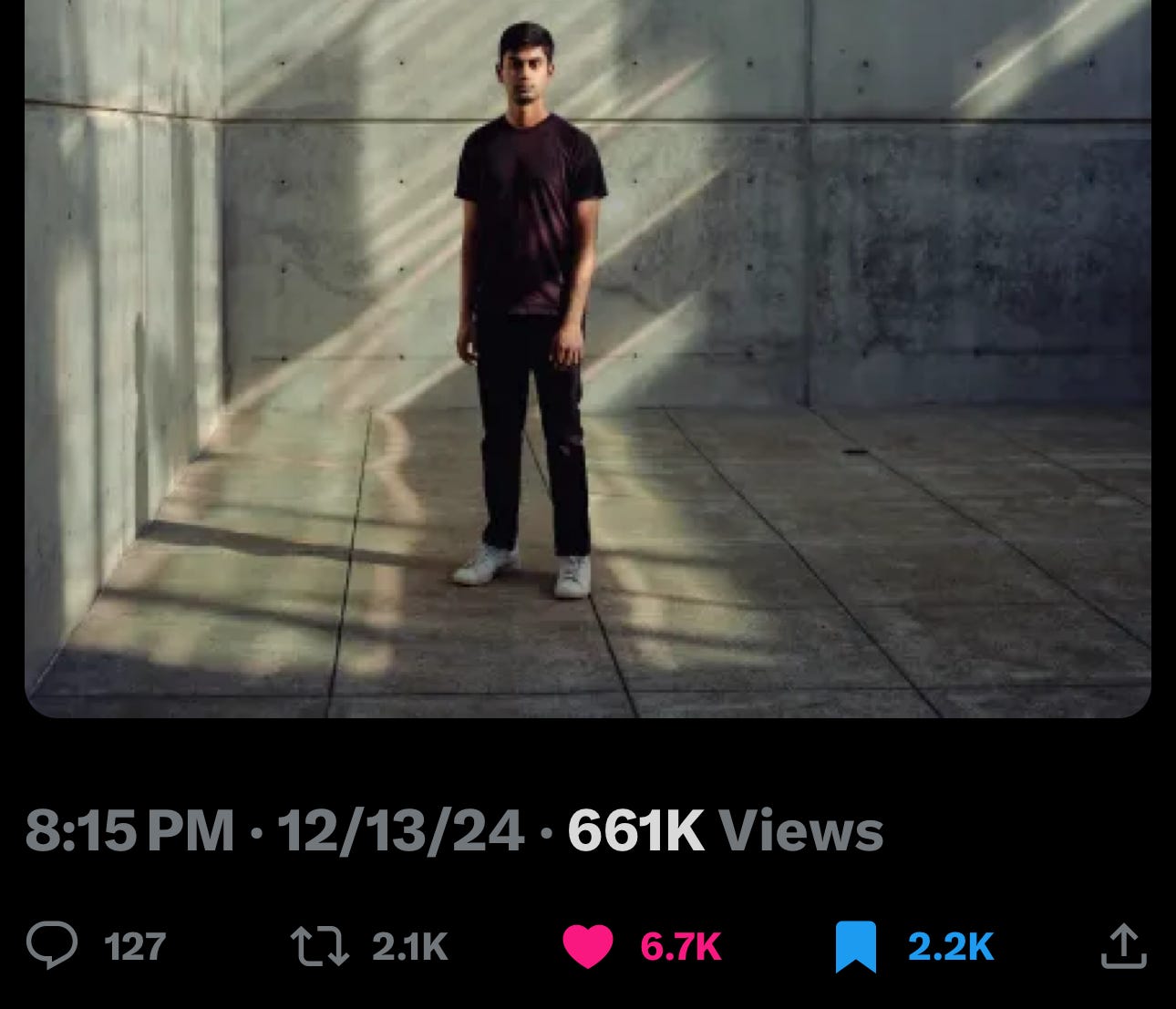

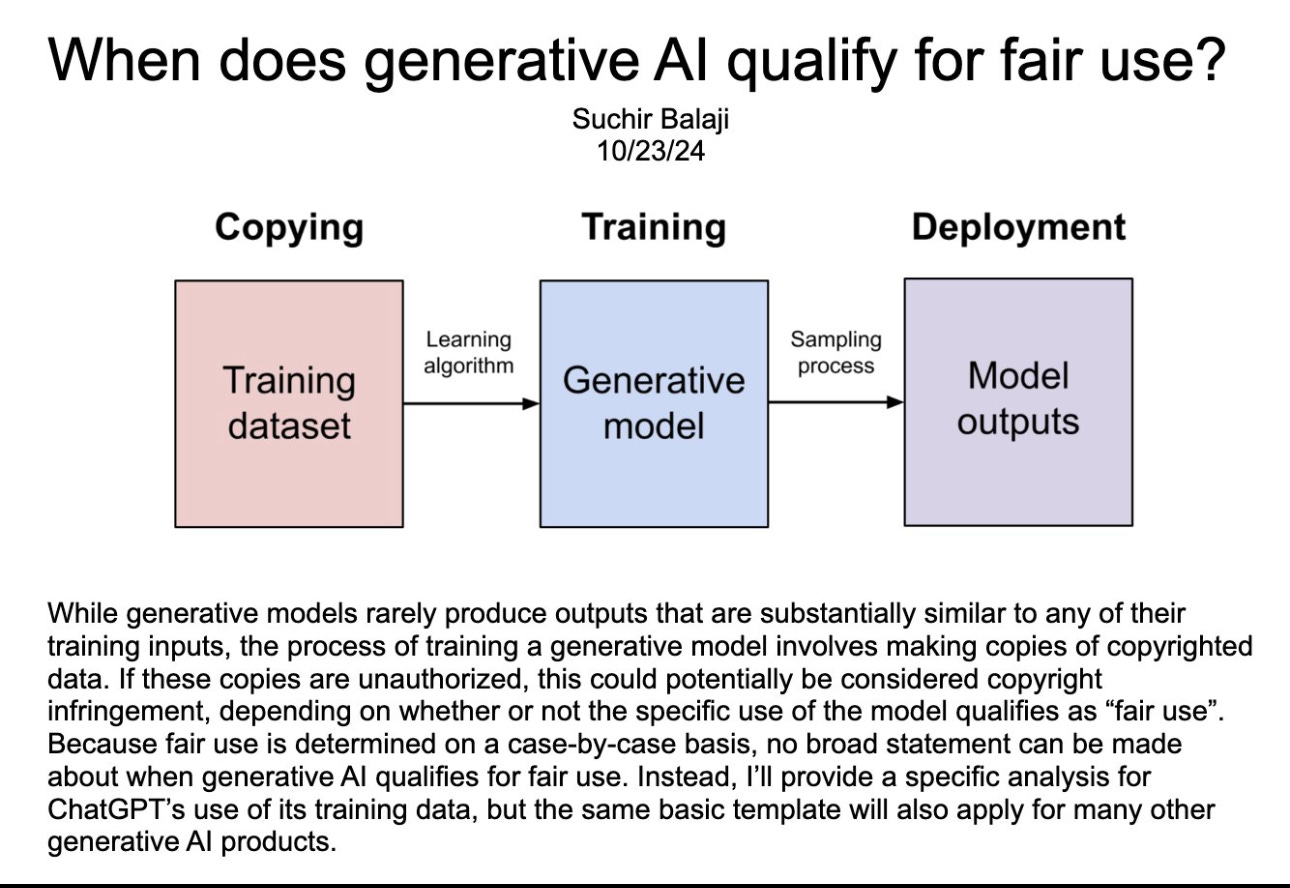

26-Year-Old Researcher Suchir Balaji Found Dead Three Months After Accusing OpenAI/ChatGPT/Sam Altman of Violating U.S. Copyright Law

If they let you live, you made a deal with them

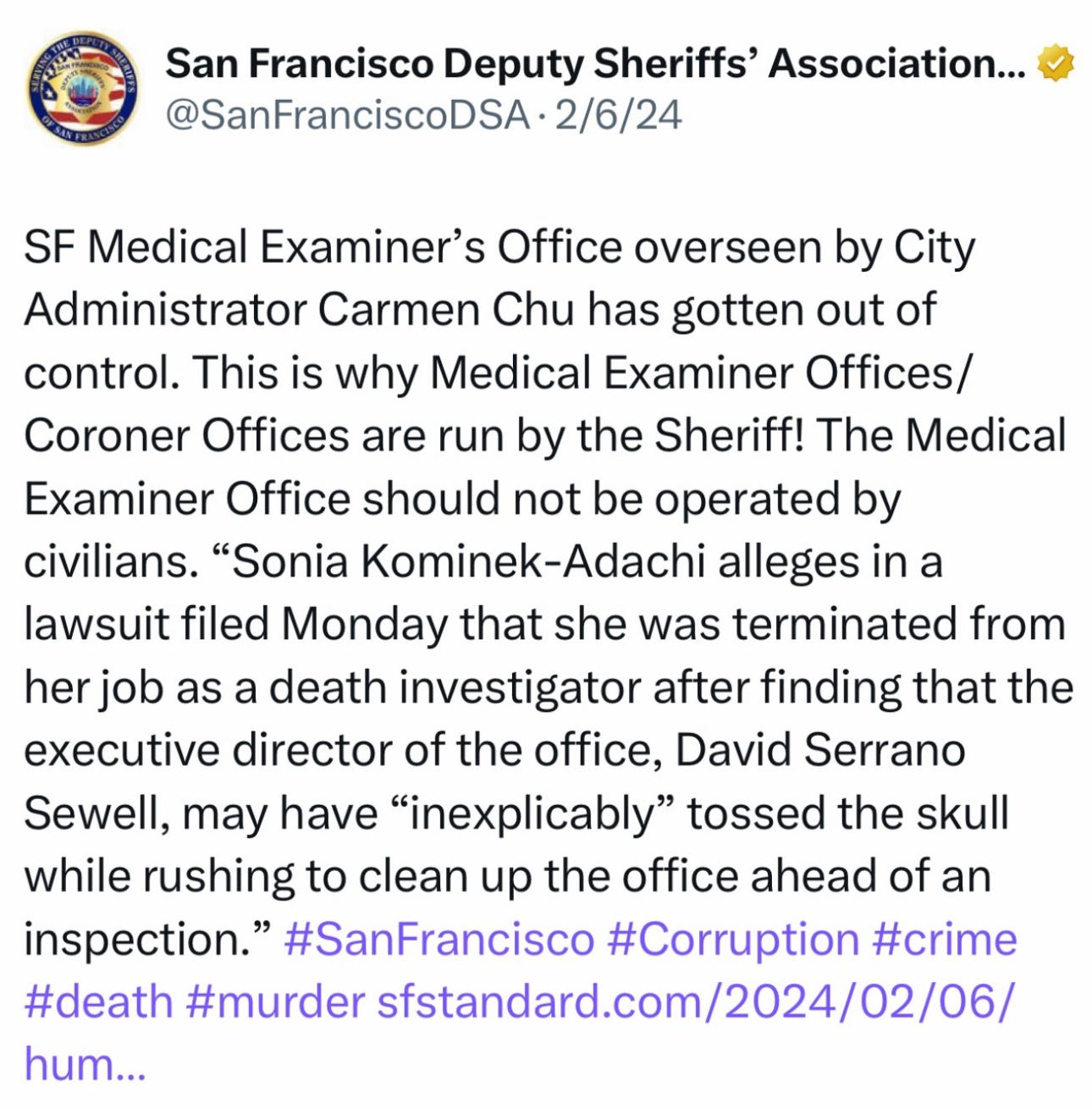

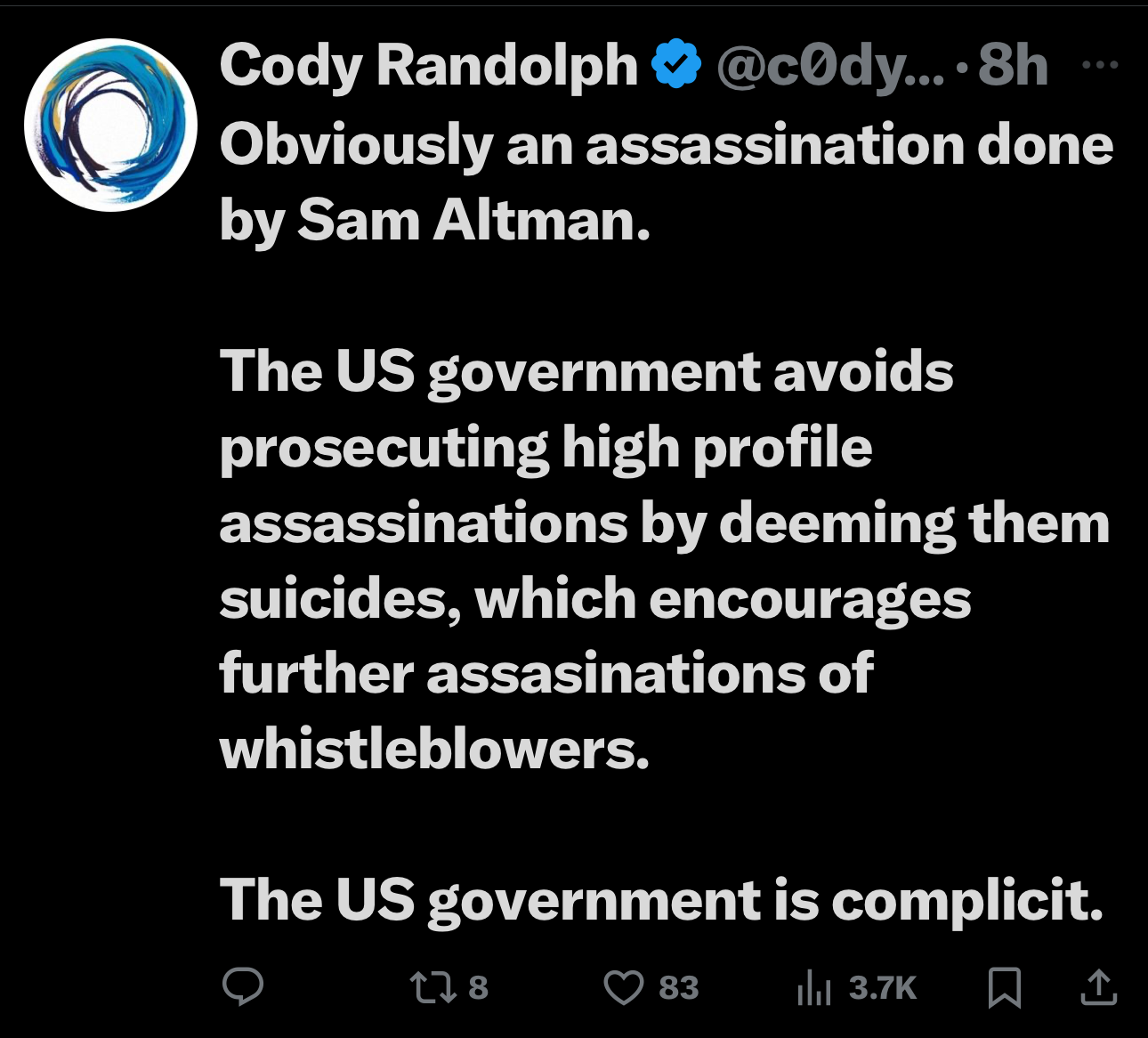

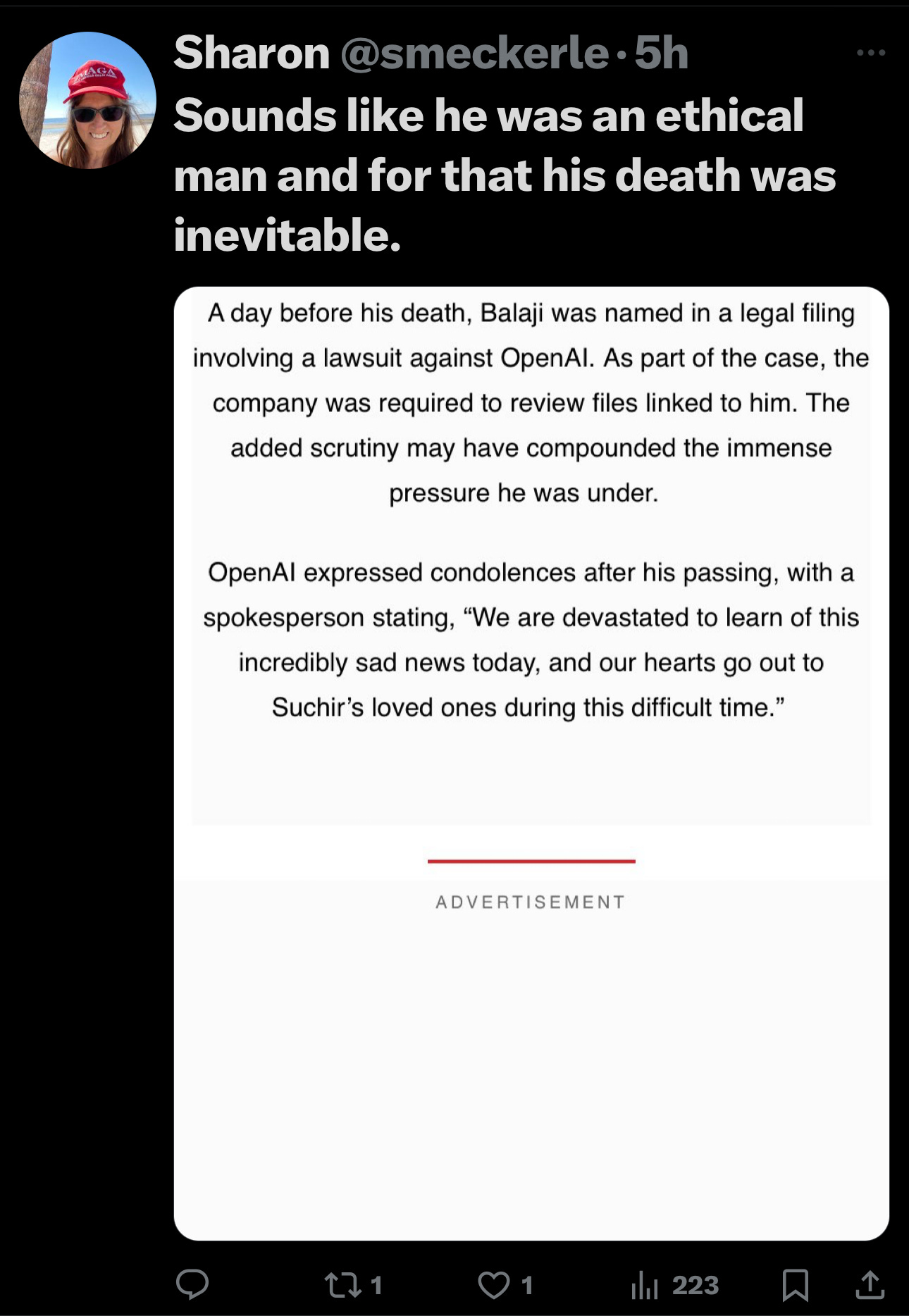

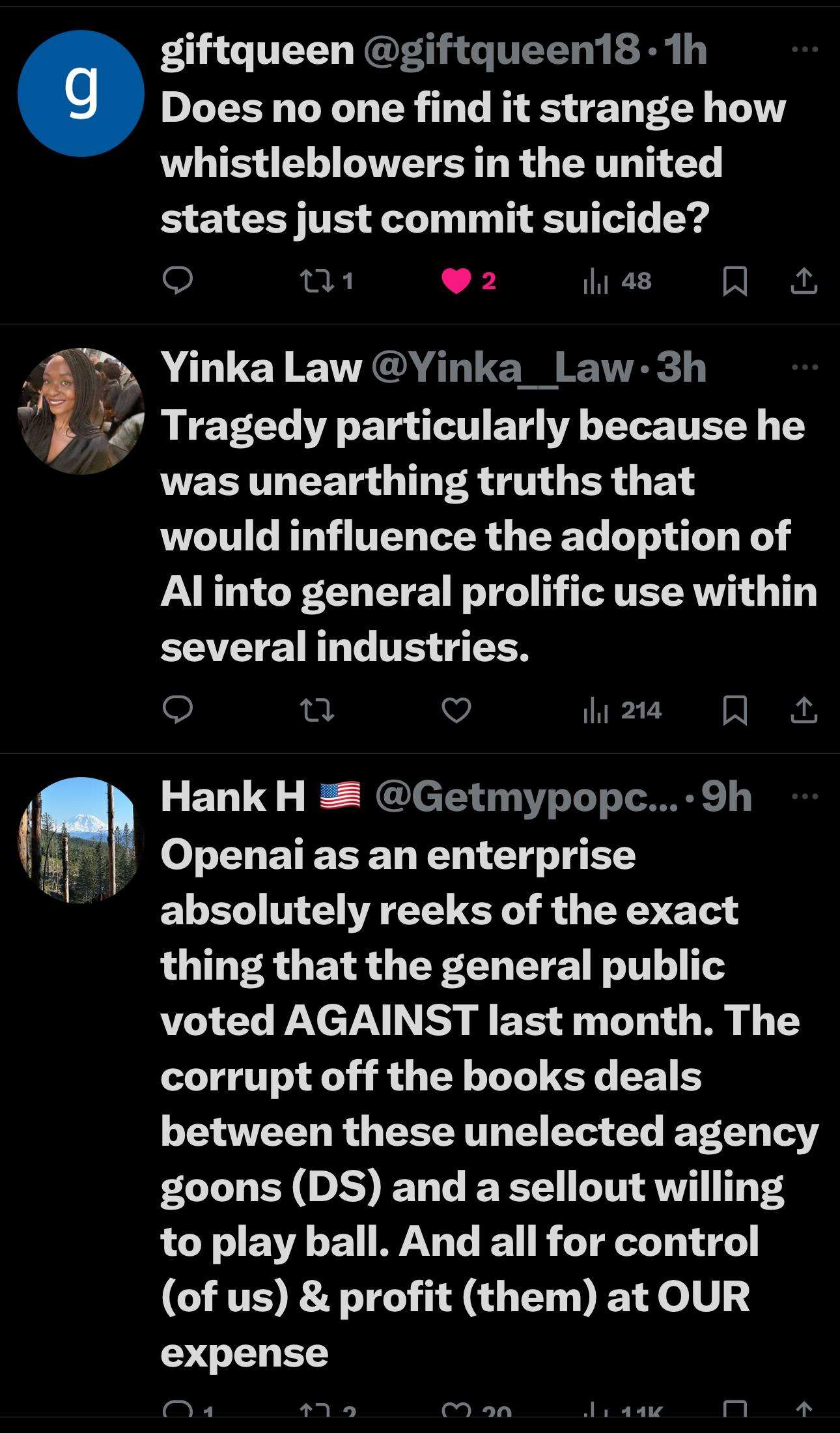

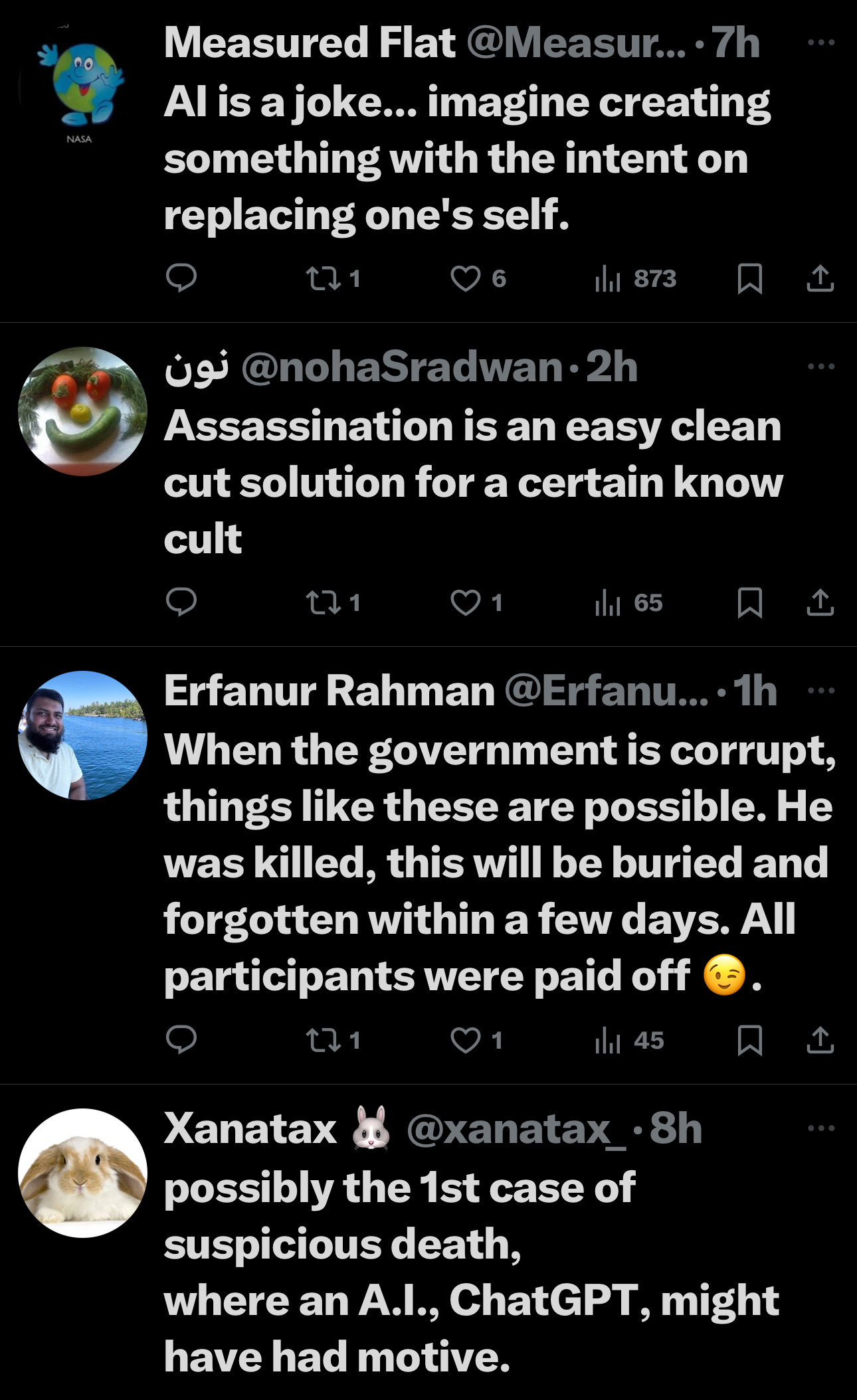

The Tweets

Source: https://x.com/5149jamesli/status/1867755258812018939?s=46

The Thread

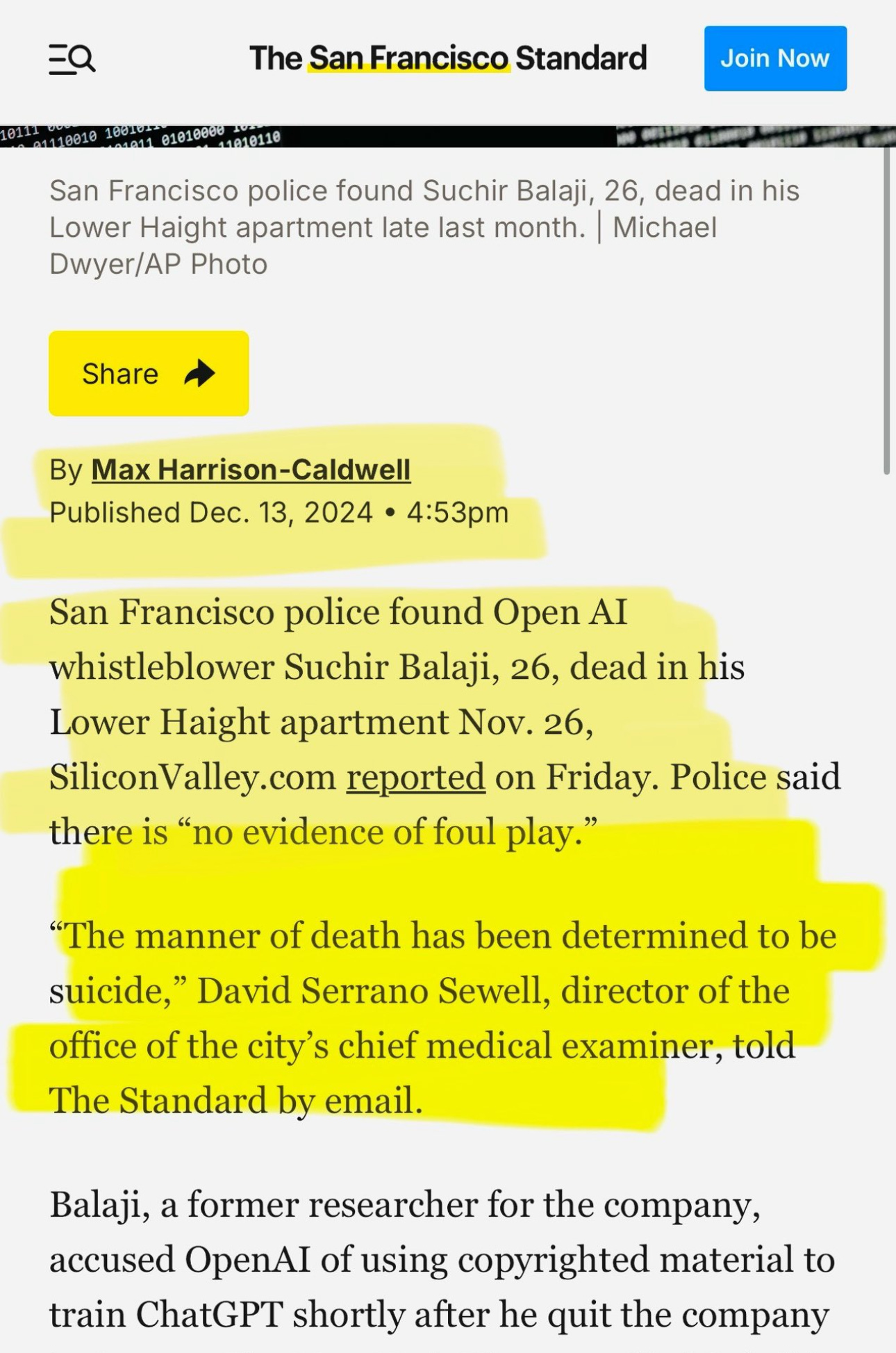

The Article

Prominent artificial intelligence research organization OpenAI recently appointed newly retired U.S. Army General and former National Security Agency (NSA) director Paul M. Nakasone to its board of directors.

Nakasone will join the Board’s newly announced Safety and Security Committee, slated to advise OpenAI’s Board on critical safety- and security-related matters and decisions.

Established following an exodus of OpenAI higher-ups concerned about the company’s perceived de-prioritization of safety-related matters, the new Safety and Security Committee is OpenAI’s apparent effort to reestablish a safety-forward reputation with an increasingly wary public.

AI safety concerns are of the utmost importance, but OpenAI should not use them to ram through an appointment that appears poised to normalize AI’s militarization while spinning the ever-revolving door between defense and intelligence agencies and Big Tech.

The ‘revolving door’ strikes again

Following his 38-year military career, including over five years heading U.S. Army Cyber Command, Nakasone’s post-retirement OpenAI appointment and shift to the corporate sector mimics the military-industrial complex’s ever-“revolving door” between senior defense or intelligence agency officials and private industry.

The phenomenon manifests itself in rampant conflicts of interest and massive military contracts alike: according to an April 2024 Costs of War report, U.S. military and intelligence contracts awarded to major tech firms had ceilings “worth at least $53 billion combined” between 2019 and 2022.

Having quietly lifted language barring the military application of its tech from its website earlier this year, OpenAI apparently wants in on the cash. The company is currently collaborating with the Pentagon on cybersecurity-related tools and on efforts to prevent veteran suicide.

A slippery slope

OpenAI remains adamant its tech cannot be used to develop or use weapons despite recent policy changes. But AI’s rapid wartime proliferation in Gaza and Ukraine highlights other industry players’ lack of restraint; failing to keep up could mean losing out on lucrative military contracts in a competitive and unpredictable industry.

Similarly, OpenAI’s current usage policies affirm that the company’s products cannot be used to “compromise the privacy of others,” especially in the forms of “[f]acilitating spyware, communications surveillance, or unauthorized monitoring of individuals.” But Nakasone’s previous role as director of the NSA, an organization infamous for illegally spying on Americans, suggests such policies may not hold water.

In NSA whistleblower Edward Snowden’s words: “There is only one reason for appointing an [NSA] Director to your board. This is a willful, calculated betrayal of the rights of every person on Earth.”

Considering the growing military use of AI-powered surveillance systems, including AI-powered reconnaissance drones and AI-powered facial recognition technology, the possible wartime surveillance implications of OpenAI’s NSA hire cannot be ruled out.

Meanwhile, OpenAI’s scandal-laden track record, which includes reportedly shoplifting actor Scarlett Johansson’s voice for ChatGPT, CEO Sam Altman’s failed ousting, and previously restrictive, often lifelong non-disclosure agreements for former OpenAI employees, remains less than reassuring.

All things considered, Nakasone’s OpenAI appointment signals that a treacherous, more militarized road for OpenAI, as well as AI as a whole, likely lies ahead.

Source: https://responsiblestatecraft.org/former-nsa-chief-revolves-through-openai-s-door/

The Latest Lawsuit

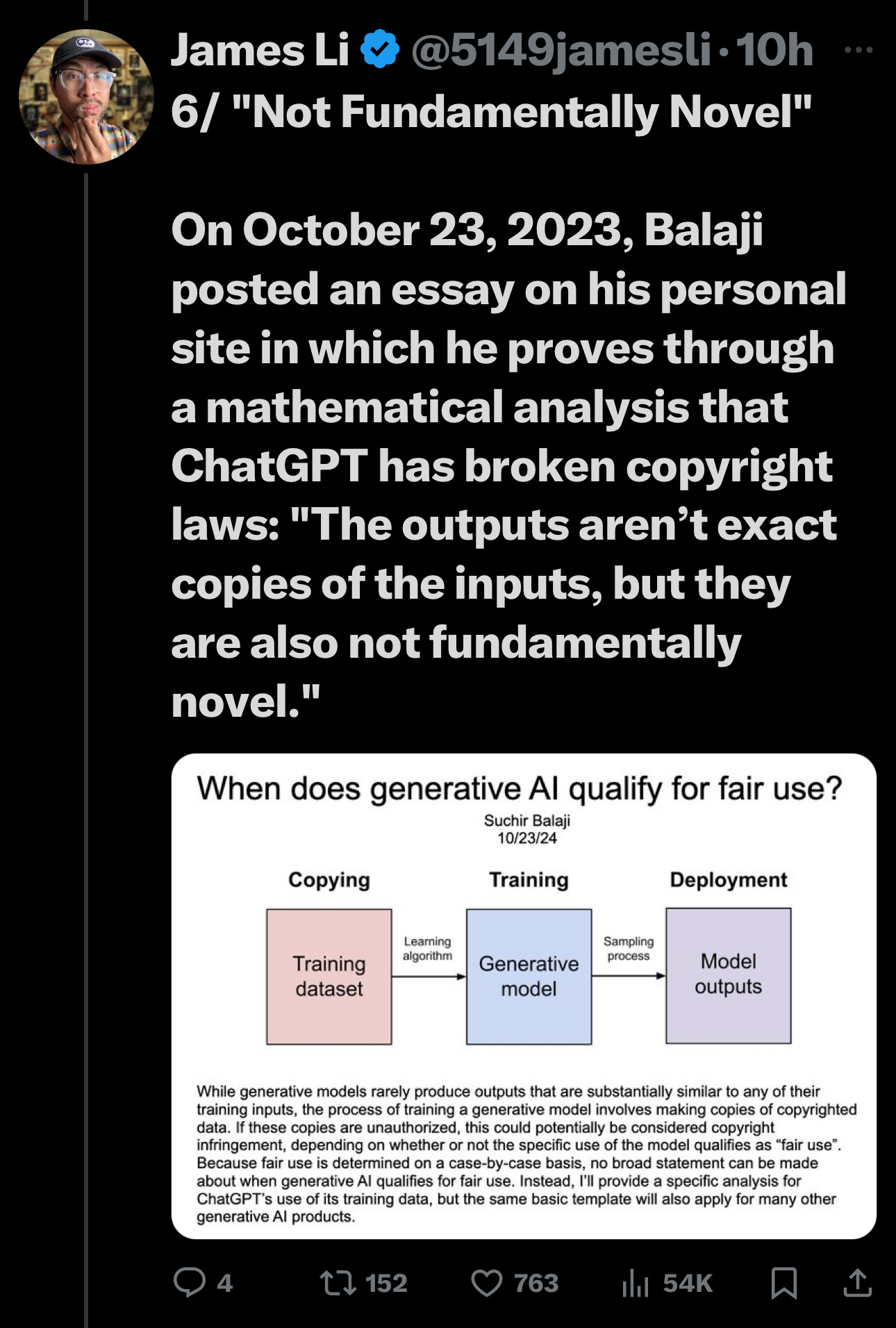

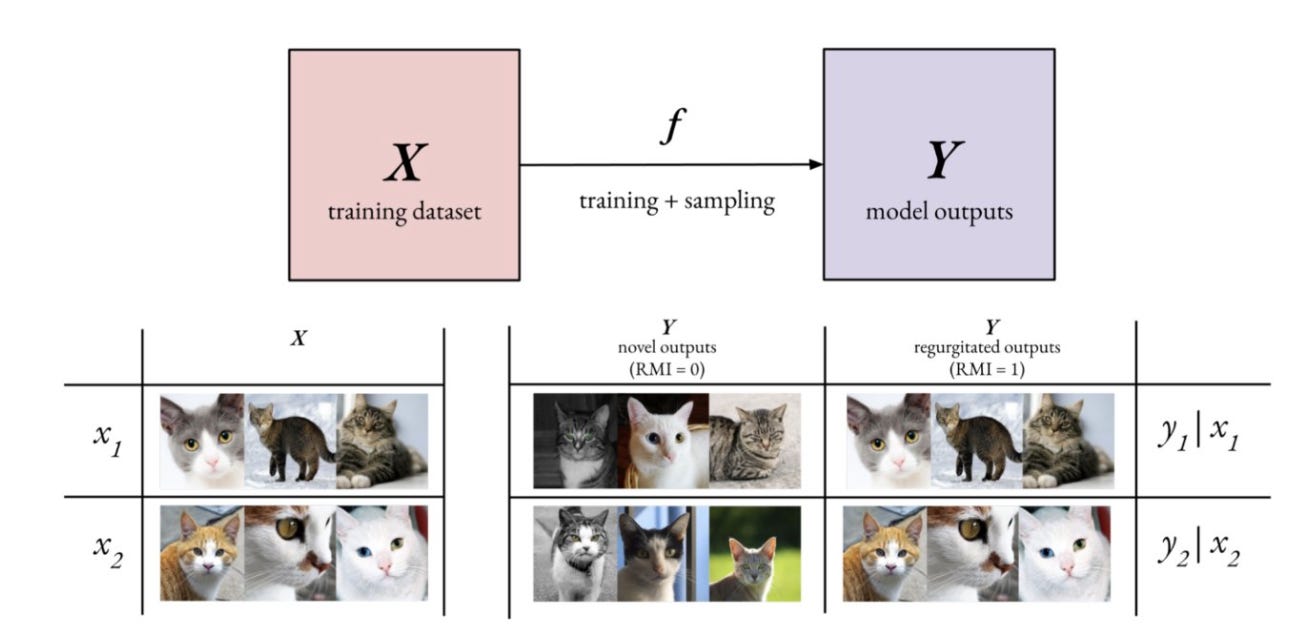

As AI continues to evolve at lightning speed, concerns about how companies train their models have been growing. OpenAI, the maker of ChatGPT, is now facing heat in Canada and has been accused of violating copyright laws by using content from news media outlets for profit.

Five major Canadian news organizations have taken legal action against OpenAI, alleging regular copyright violations and breaches of online terms of use. The lawsuit, filed on November 29, is backed by The Globe and Mail, The Canadian Press, CBC/Radio-Canada, Torstar, and Postmedia. Canada's top news outlets have joined forces to accuse OpenAI of unlawfully using their articles to train its AI models.

In the outlets' words: "OpenAI regularly breaches copyright and online terms of use by scraping large swaths of content from Canadian media to help develop its products, such as ChatGPT."

The group is demanding C$20,000 (around $14,300 when directly converted) in punitive damages for every article they claim was unlawfully used to train ChatGPT. If proven, the total compensation could reach billions.

The media outlets are also pushing for OpenAI to hand over a share of the profits earned from their articles. Additionally, they're seeking a court order to block the company from using their content moving forward.

Chatbots like ChatGPT rely on publicly available online data for training, with content from newspapers often being part of this data-scraping process. OpenAI defends its methods, stating that its models are trained on publicly available information, adhering to fair use and international copyright principles meant to respect creators' rights.

This case joins a growing list of lawsuits against OpenAI and other tech companies by authors, artists, music publishers, and other copyright holders over the use of their work to train generative AI systems. Earlier this year, similar lawsuits were filed against OpenAI in the US, including cases brought by The New York Times and other media outlets.

Personally, I think stricter regulations are needed to ensure tech companies don't exploit people's work or personal data when training AI models. It's a growing concern, and it's not just limited to the US and Canada. For example, the European Union has already launched an investigation into how Elon Musk's X is training its models, while Meta's AI systems, which power Facebook, Instagram, and WhatsApp's AI assistant, are still not available in the EU due to similar issues.

Source: https://www.phonearena.com/news/chatgpt-billion-dollar-lawsuit-canada_id165402

AI uses 16 times as much electricity and poses a problem for creative writing.

I don't use AI for my writing. On Twitter/X, I ask Grok questions, and it draws its answers from Twitter/X data.

Do you use AI? ChatGPT or Twitter’s Grok?

How many presidents did they kill?

How many high profile people suddenly committed suicide?

How many jumped from windows in Russia?

Suchir is a flea on a dog's back.

Look up Rudolf Steiner's lectures on Ahriman and The Eighth Sphere.

AI not good. I have no use for it.

Nice dig Margaret! Thank you. 🙏💖

Happy Holidays to you and yours. 🎄God be with us all.